APIs and MCPs both help systems talk to each other.

At first, they might look the same. Both allow one piece of software to ask another for data or perform an action. But the way they work and the reason they exist are completely different.

An API, or Application Programming Interface, is built for developers. It is how one program communicates with another. MCP, or Model Context Protocol, is built for AI models. It is how a large language model like GPT or Claude can safely talk to external systems and use tools.

Let’s look at what makes them different, why MCP exists when APIs already do the job, and how they work in real examples.

What is an API

An API is a set of rules that lets software talk to software.

It is like a waiter in a restaurant. You tell the waiter what you want, the kitchen prepares it, and the waiter brings it back. You never go into the kitchen yourself.

For example, if you want to get details of a GitHub user, you can make a simple API request.

GET https://api.github.com/users/usernameThe server replies with a response like this:

{

"login": "john",

"id": 12345,

"followers": 120,

"repos": 42

}The API follows a pattern that both the client and the server understand. Developers use APIs every day to connect systems like payment gateways, weather data, or user accounts.

APIs are built for humans to code against. A developer writes the logic, sends requests, handles errors, adds authentication, and decides what to do with the response.

What is MCP

MCP stands for Model Context Protocol. It is a new standard that allows AI models to interact with external tools, data, and systems in a safe, structured way.

MCP is not meant for developers directly. It is meant for large language models.

An AI model cannot make network requests by itself. It does not know how to use headers, tokens, or API formats. It just predicts text based on what you type.

So if you tell a model, “Get the weather for Delhi,” it might generate some text that looks like a Python request. But it cannot actually run that code.

That is where MCP comes in. MCP acts like a bridge between the AI model and the real world. It defines a set of “tools” that the model can use safely.

Each tool is described using a schema so that the model knows what the tool does, what inputs it takes, and what it returns.

How MCP Works

You can think of MCP as a server that runs in the background. It exposes tools that an AI model can call. Each tool is a small piece of code that performs an action.

For example, you can write a simple MCP server in Python like this:

from mcp.server.fastmcp import FastMCP

import requests

mcp = FastMCP(name="github-tools")

@mcp.tool()

def get_repos(username: str):

"""Fetch public repositories for a user"""

url = f"https://api.github.com/users/{username}/repos"

return requests.get(url).json()

mcp.run()This server defines a single tool called get_repos. It takes a username and fetches their GitHub repositories using the GitHub API.

Now, if an AI model is connected to this MCP server, it can ask for “get_repos for user john” and receive the data. The model does not know or care about the actual URL, headers, or tokens. The MCP server handles that part.

Why Not Just Use an API

You might wonder, why not just let the AI model call the API directly? If the model can talk to APIs, why add another layer?

The short answer is that AI models cannot safely call APIs on their own. They have no built-in execution environment, no way to store secrets, and no limits.

Letting a model make arbitrary network requests would be dangerous. It could expose keys, access private data, or even cause damage by mistake.

MCP solves that problem by creating a controlled layer between the model and your systems. You decide which tools the model can use. You can restrict inputs, filter outputs, and monitor everything the model does.

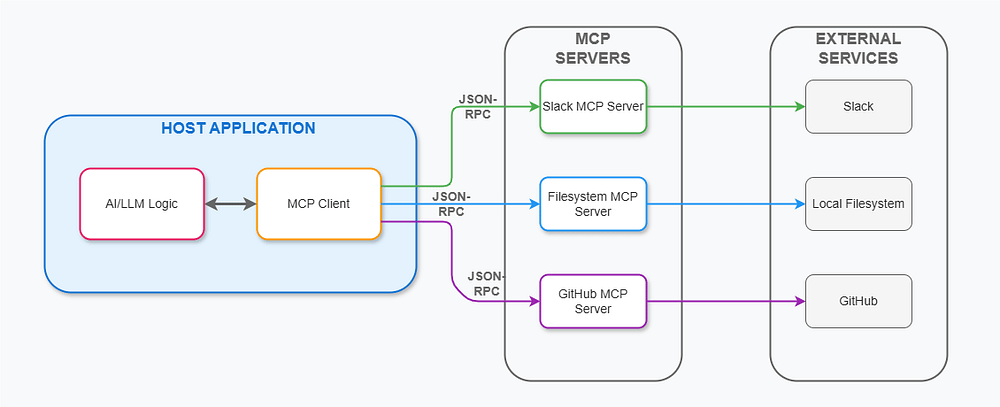

MCP Architecture

In an MCP setup, the model never sees API keys or sensitive URLs. It just calls a tool that you define. The tool itself handles the network call and returns only the safe data.

This makes MCP much safer for real-world use, especially in enterprise or private environments.

MCP vs API in Practice

Let’s take a simple example. Suppose you want an AI to fetch weather data.

If you were using an API, you might write code like this:

import requests

response = requests.get("https://api.weatherapi.com/v1/current.json?key=API_KEY&q=Delhi")

print(response.json())That works fine if a human developer runs it. But if an AI model tried to do the same, it would need access to your API key, network, and code execution. That is unsafe.

With MCP, you can define a tool like this:

@mcp.tool()

def get_weather(city: str):

"""Get weather for a city"""

import requests

url = f"https://api.weatherapi.com/v1/current.json?key=API_KEY&q={city}"

return requests.get(url).json()Now the AI model can simply say, “Call get_weather with city=Delhi,” and the MCP server runs the function.

The model does not see the API key or the actual URL. It just uses the tool safely.

Key Conceptual Difference

The difference between MCP and API is not just technical. It is philosophical.

APIs are for humans to use directly. They assume the caller understands the system, can handle tokens, and knows how to format requests.

MCP is for AI models. It assumes the caller is an intelligent but untrusted system that cannot hold secrets or execute code. The protocol gives the model only what it needs to perform reasoning and tool usage.

So while APIs expose endpoints like /users or /weather, MCP exposes capabilities like “get_user_info” or “get_weather.” The AI model does not call URLs. It calls functions with typed parameters.

Discovery and Schema

Another big advantage of MCP is that it can tell the model what tools are available.

When an AI model connects to an MCP server, it can ask for a list of tools. The server replies with their names, descriptions, and parameters in a structured format.

For example, the model might receive something like this:

{

"tools": [

{

"name": "get_weather",

"description": "Get weather for a city",

"parameters": {

"city": {"type": "string"}

}

}

]

}This means the model does not need separate documentation or prompt tuning. It knows exactly how to call each tool.

In contrast, an API would require reading human-written docs, copying sample requests, and guessing formats.

Security and Privacy

MCP provides better control over what the model can do.

Since the tools are defined in your server, you can add rules, limits, and validations. You can prevent the model from sending dangerous inputs or accessing private data.

For example, your tool can reject requests that ask for too much data or contain suspicious patterns. You can also log every call for audit purposes.

APIs, on the other hand, are exposed over the internet. If an API key leaks or a model calls the wrong endpoint, you could face a data breach.

With MCP, everything can run locally, behind a firewall, or on a private network. The model never needs direct access to the outside world.

The Future of MCP

Big AI companies like OpenAI and Anthropic are adopting MCP as a shared standard. That means any model that supports MCP can use your tools without modification.

If you build a weather MCP server today, it could work with GPT, Claude, or any other MCP-compatible model in the future.

This makes MCP a unifying layer between AI systems and external tools, much like APIs were for web applications.

Conclusion

At a glance, MCP and API might seem similar because both pass data between systems. But the difference is who they are built for.

APIs are built for developers and systems that can safely make network calls. MCP is built for AI models that reason with text but cannot safely execute code.

An API gives you endpoints to access data. MCP gives the AI tools to use that data safely.

Think of it this way. APIs connect machines. MCP connects intelligence to machines.

That is why MCP is not replacing APIs but sitting above them as a new layer. APIs will still provide the data. MCP will just make it possible for AI to use those APIs safely, with structure, control, and understanding.